AI Policy

- Introduction & Scope

The Blue Sky Creations Group is committed to maintaining the confidentiality, integrity, and availability of information assets, including those related to Artificial Intelligence (AI) systems development and provision.

Compliance with the New Zealand Privacy Act 2020, the Australian Privacy Act 1988, the European Union General Data Protection Regulation (GDPR), the United Kingdom GDPR and, more recently, the European Union Artificial Intelligence Act (the Act) is a prerequisite.

This policy outlines the principles, responsibilities, and procedures that govern the management of information security within our organisation, specifically addressing the requirements of the Act.

The policy applies to all employees, contractors, suppliers, and other parties working on behalf of our organisation. It encompasses all information assets, systems, processes, and activities related to AI systems development and provision, including but not limited to algorithm development, data processing, model training, and deployment.

- Reference documents

- Statement of Applicability

- ISMS Scope

- Privacy Policy

- General Data Protection Policy

- AI Act Introduction

The Act is a European Union regulation on artificial intelligence. Proposed by the European Commission on 21 April 2021, adopted by the European Parliament on 13 March 2024 and in force since 1 August 2024, it establishes a common regulatory and legal framework for AI.

Its scope encompasses all types of AI in a broad range of sectors (exceptions include AI systems used solely for military, national security, research, and non-professional purposes). As product regulation, it primarily regulates the providers of AI systems and entities using AI in a professional context, rather than conferring rights on individuals.

The Act was revised following the rise in popularity of generative AI systems such as ChatGPT, whose general-purpose capabilities presented different stakes and did not fit the original framework. Stricter obligations apply to powerful general-purpose AI models with systemic impact.

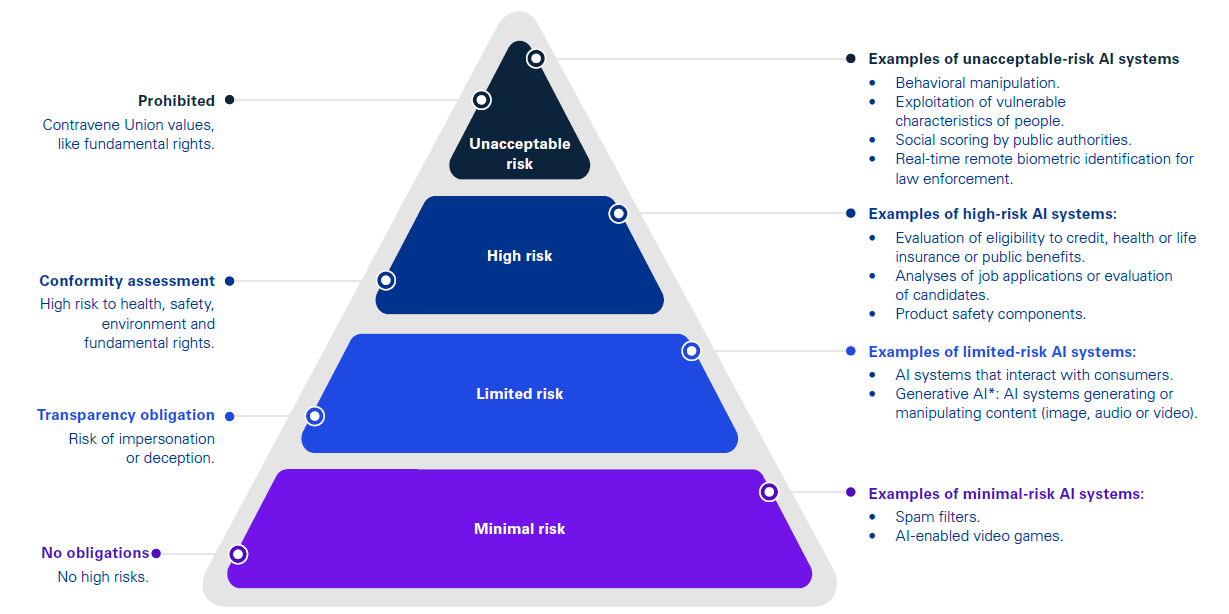

The Act aims to classify and regulate AI applications based on their risk to cause harm.

- Act Classifications

The classification comprises four categories:

- Unacceptable Risk – AI applications deemed to represent unacceptable risks are banned.

- High Risk – AI applications must comply with security, transparency and quality obligations and undergo conformity assessments.

- Limited Risk – AI applications have transparency obligations only.

- Minimal Risk – AI applications are not regulated.

For general-purpose AI, transparency requirements are imposed, with additional and more thorough evaluations for models that represent particularly high risks.

The Act further establishes a European Artificial Intelligence Board to promote cooperation between national authorities and ensure compliance with the regulation.

- Risk Categories

There are different risk categories depending on the type of application, and one specifically dedicated to general-purpose generative AI.

- Unacceptable risk: AI applications that fall under this category are banned. This includes AI applications that manipulate human behaviour, those that use real-time remote biometric identification (including facial recognition) in publicly accessible spaces for law enforcement purposes (subject to narrow exceptions), and those used for social scoring (ranking people based on their personal characteristics, socio-economic status or behaviour).

- High-risk: AI applications that pose significant threats to health, safety, or the fundamental rights of persons, notably AI systems used in health, education, recruitment, critical infrastructure management, law enforcement or justice. They are subject to quality, transparency, human oversight, and safety obligations, and in some cases a Fundamental Rights Impact Assessment is required. They must be evaluated before they are placed on the market, as well as throughout their life cycle. The list of high-risk applications can be expanded without amending the Act itself.

- General-purpose AI (“GPAI”): This category was added in 2023 and includes, in particular, foundation models such as those underlying ChatGPT. They are subject to transparency requirements. High-impact general-purpose AI models that could pose systemic risks (notably those trained using more than 10^25 floating-point operations, or FLOPs) must also undergo a thorough evaluation process.

- Limited risk: These systems are subject to transparency obligations aimed at informing users that they are interacting with an artificial intelligence system and allowing them to exercise their choices. This category includes, for example, AI applications that make it possible to generate or manipulate images, sound, or videos (like deepfakes). In this category, free and open-source models whose parameters are publicly available are not regulated, with some exceptions.

- Minimal risk: This includes, for example, AI systems used for video games or spam filters. Most AI applications are expected to fall into this category. They are not regulated, and Member States are prevented from regulating them further through maximum harmonisation. Existing national laws related to the design or use of such systems are disapplied. However, a voluntary code of conduct is suggested.
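For teams that keep an internal register of AI systems, the tiers above could be recorded in a simple structure. The following is a minimal Python sketch, assuming such a register exists: it encodes the four risk tiers with the obligations as summarised in this policy (a paraphrase, not the legal text), plus the 10^25 FLOPs training-compute threshold for general-purpose AI models. All names (RiskTier, OBLIGATIONS, obligations_for, gpai_presumed_systemic) are hypothetical. The sketch only records a classification already made through the risk assessment process; it does not determine one.

```python
from enum import Enum


class RiskTier(Enum):
    """Hypothetical register values for the four risk tiers of the Act."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Obligations per tier, paraphrased from this policy (not an exhaustive legal list).
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["prohibited: must not be developed or deployed"],
    RiskTier.HIGH: [
        "quality",
        "transparency",
        "human oversight",
        "safety",
        "evaluation before market placement and throughout the life cycle",
    ],
    RiskTier.LIMITED: ["transparency: inform users they are interacting with AI"],
    RiskTier.MINIMAL: ["none mandatory; voluntary code of conduct suggested"],
}

# Training compute above which a GPAI model is treated as posing systemic risk.
SYSTEMIC_RISK_FLOPS = 1e25


def obligations_for(tier: RiskTier) -> list:
    """Return the summarised obligations recorded for a given tier."""
    return OBLIGATIONS[tier]


def gpai_presumed_systemic(training_flops: float) -> bool:
    """True if training compute is strictly greater than the 10^25 FLOPs threshold."""
    return training_flops > SYSTEMIC_RISK_FLOPS
```

For example, gpai_presumed_systemic(3e25) returns True, while a model trained with exactly 1e25 FLOPs returns False, because the threshold is "more than" 10^25.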

- Information Security Objectives

This Policy ensures that our organisation achieves the following objectives:

- Compliance with the provisions of the Act, including but not limited to requirements related to data protection, transparency, accountability, and ethical use of AI systems.

- Protect the confidentiality, integrity, and availability of information assets, including AI algorithms, datasets, and proprietary information, in accordance with the principles and requirements of the Act.

- Minimise the risk of unauthorised access, disclosure, alteration, or destruction of information assets, particularly those containing sensitive or personal data used in AI systems.

- Implement appropriate technical and organisational measures to mitigate the risks associated with AI systems development and provision, including measures to address bias, fairness, interpretability, and accountability.

- Promote a culture of security awareness and accountability among employees and stakeholders involved in AI systems development and provision, emphasizing compliance with the Act and related policies and procedures.

- Information Security Responsibilities

Our organisation manages AI information security as follows.

- Management Commitment: Senior management is committed to ensuring that information security measures are implemented and maintained in compliance with the Act and other relevant laws, regulations, and standards.

- Information Security Coordinator: The Information Security Coordinator is responsible for overseeing the implementation and maintenance of information security measures related to AI systems development and provision, including compliance with the Act.

- Employees: All employees are responsible for complying with information security policies, procedures, and guidelines, particularly those related to AI systems development and provision. Employees involved in AI-related activities are expected to adhere to ethical principles and legal requirements outlined in the Act.

- Risk Management

Our organisation manages AI risk as follows:

- Risk Assessment: Our organisation conducts regular risk assessments to identify, evaluate, and prioritise information security risks related to AI systems development and provision, considering the requirements of the Act.

- Risk Treatment: Based on the results of risk assessments, appropriate controls are implemented to mitigate identified risks to an acceptable level, including controls to address legal and regulatory requirements of the Act.

- Access Control

Our organisation controls access as follows:

- Access Rights: Access to AI systems, databases, and sensitive information is granted on a need-to-know basis and is regularly reviewed and updated as necessary to ensure compliance with the Act.

- Authentication and Authorisation: Strong authentication mechanisms and access controls are implemented to ensure that only authorised individuals can access and modify information assets, particularly those containing sensitive or personal data used in AI systems.

- Security Awareness and Training

Our organisation undertakes the following:

- Security Awareness: We provide ongoing security awareness training to employees, contractors, and other relevant parties involved in AI systems development and provision to promote understanding of information security risks, ethical principles, and legal requirements outlined in the Act.

- Incident Response: Employees are trained to recognise and report security incidents promptly, including those related to potential violations of the Act, to facilitate timely response and resolution.

- Compliance

Our commercial and compliance teams undertake the following:

- Legal and Regulatory Compliance: We comply with the provisions of the Act, including requirements related to data protection, transparency, accountability, and ethical use of AI systems, as well as other relevant laws, regulations, and standards.

- Contractual Obligations: We ensure that contracts with clients, suppliers, and other relevant parties include appropriate information security requirements and provisions, particularly those related to AI systems development and provision and compliance with the Act.

- Monitoring and Review

Our organisation undertakes the following:

- Performance Monitoring: We regularly monitor the effectiveness of information security controls, processes, and practices related to AI systems development and provision through audits, reviews, and performance measurements, with specific attention to compliance with the Act.

- Management Review: Senior management conducts periodic reviews of the Information Security Management System (ISMS) to assess its continued suitability, adequacy, and effectiveness, including compliance with the Act, and to identify opportunities for improvement.

- Continual Improvement

Our organisation is committed to continually improving its information security management processes, controls, and practices related to AI systems development and provision, with a focus on enhancing compliance with the Act and addressing emerging threats and vulnerabilities.

- Document Control

This Policy is maintained, reviewed, and updated as necessary to ensure its continued relevance and effectiveness, particularly in relation to compliance with the Act and other legal and regulatory requirements.

- Policy Compliance

Failure to comply with this Policy, including its specific provisions related to compliance with the Act, may result in disciplinary action, up to and including termination of employment or contractual relationship, as well as legal consequences in cases of serious non-compliance.

- Validity and document management

This document is valid as of April 24, 2021.

The owner of this document is the Chief Operations Officer, who must check and, if necessary, update the document every 12 months.